(November 2025) We are recruiting PhD students for Fall 2026

We are looking for highly motivated students interested in the intersection of Ubiquitous Computing and Health to join our lab in Fall 2026, with a focus on Machine Learning for human behaviors.

UbiWell Lab

The Ubiquitous Computing for Health and Well-being (UbiWell) Lab is an interdisciplinary research group at the Khoury College of Computer Sciences and the Bouvé College of Health Sciences at Northeastern University.

We develop data-driven solutions that enable effective sensing and interventions for mental- and behavioral-health outcomes using mobile and ubiquitous technologies.

Research Areas

Our interdisciplinary team works at the intersection of mobile/wearable sensing, data science, human-centered computing, and behavioral science.

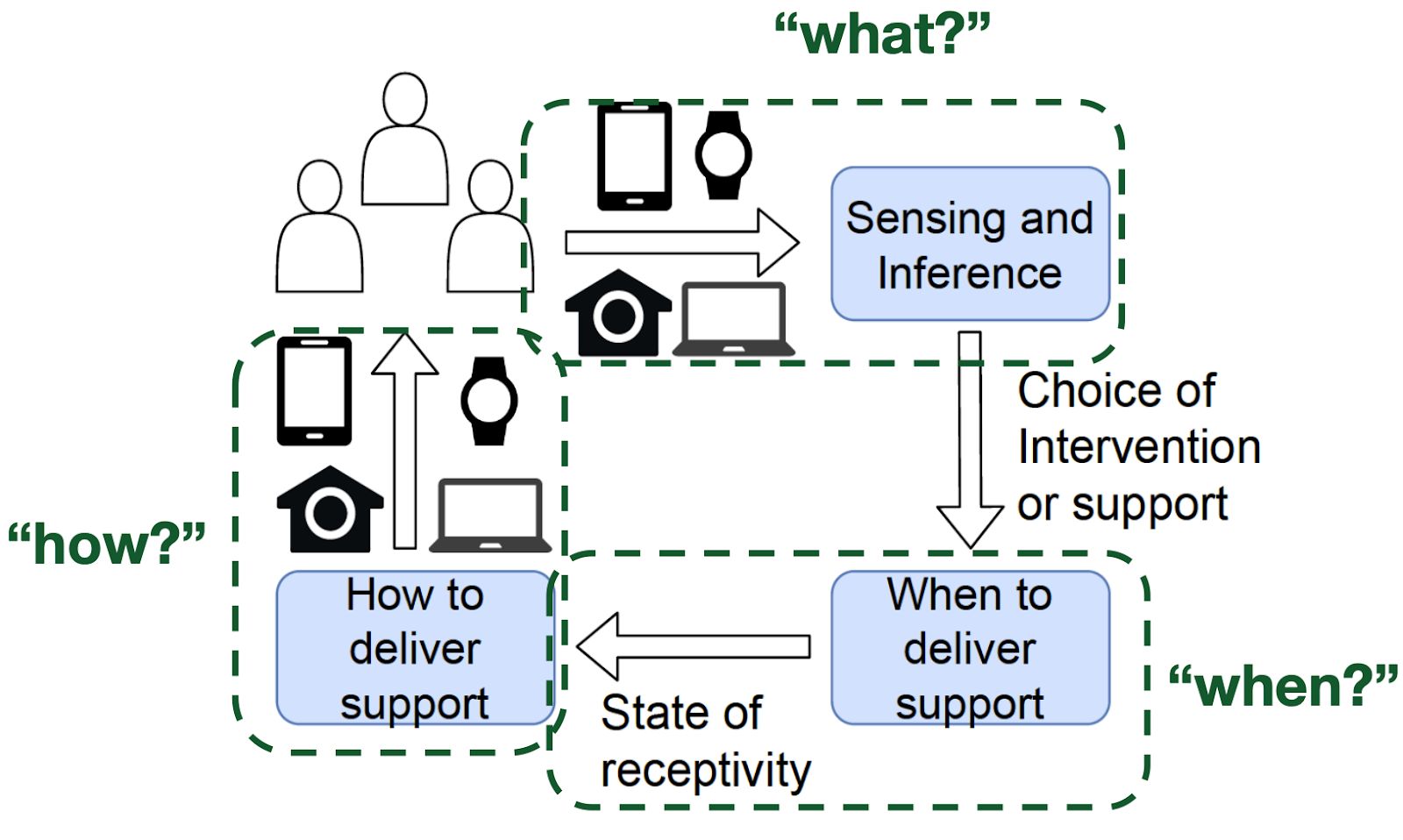

We explore and advance the complete "lifecycle" of mental- and behavioral-health sensing and intervention. This lifecycle includes (a) accurately sensing and detecting a mental or behavioral health condition, such as stress or opioid use; (b) after detecting a condition, determining the right time to deliver an intervention or support, so that the user is most likely to be receptive to it; and (c) choosing the best intervention delivery mechanism and modality to ensure just-in-time delivery and reachability.

A simplified representation of the sensing to intervention lifecycle.

Current Projects

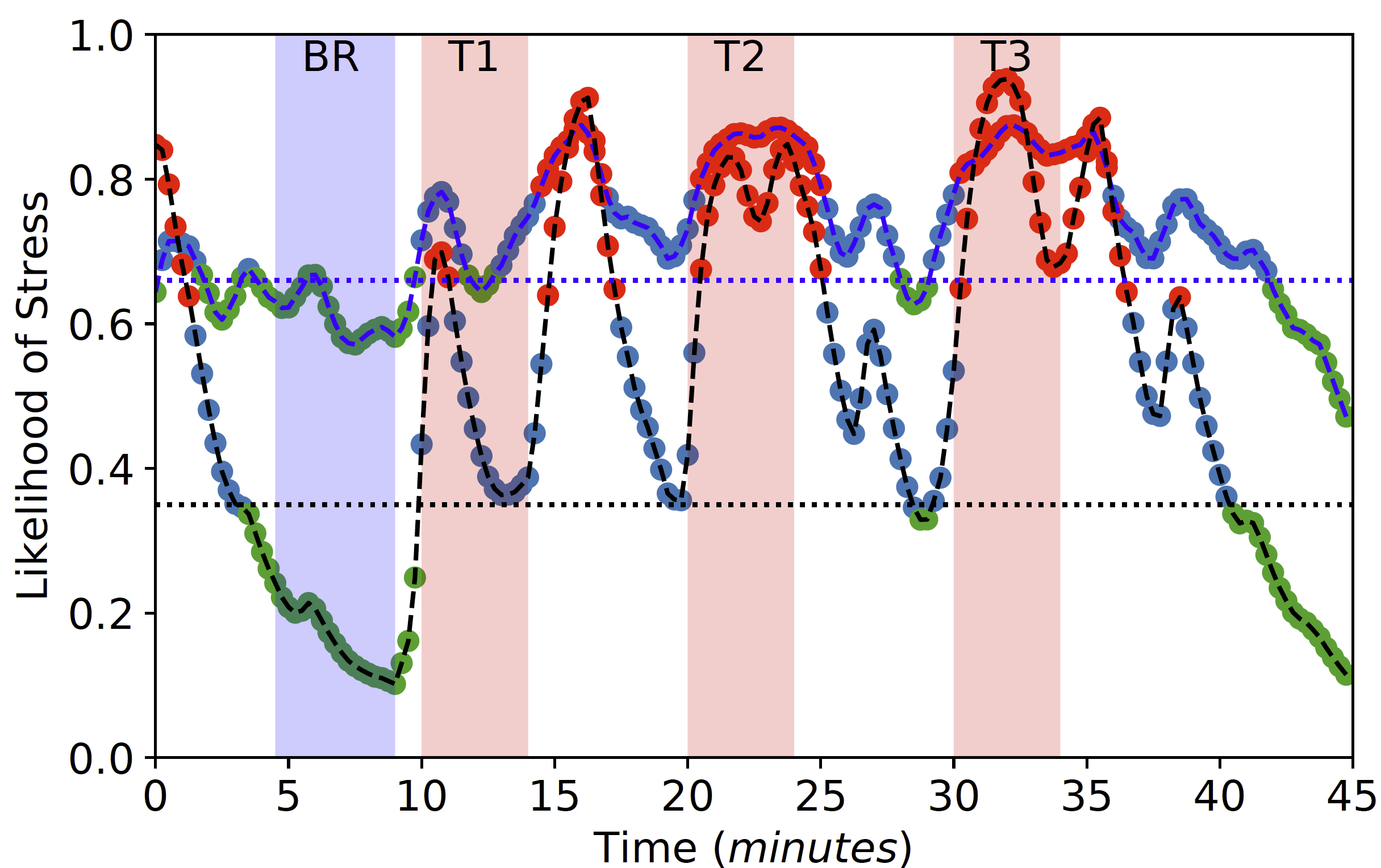

Causal modeling for physiological stress

We are working on several projects that leverage multimodal data to understand the contextual and behavioral factors that lead to physiological stress, and to evaluate how physiological stress relates to perceived stress.

Predicting relapse during OUD treatment

We are working on a longitudinal study to detect at-risk indicators, e.g., stress, craving, and mood, among patients undergoing Opioid Use Disorder (OUD) treatment, using passively collected contextual and sensor data from smartphones and wearables.

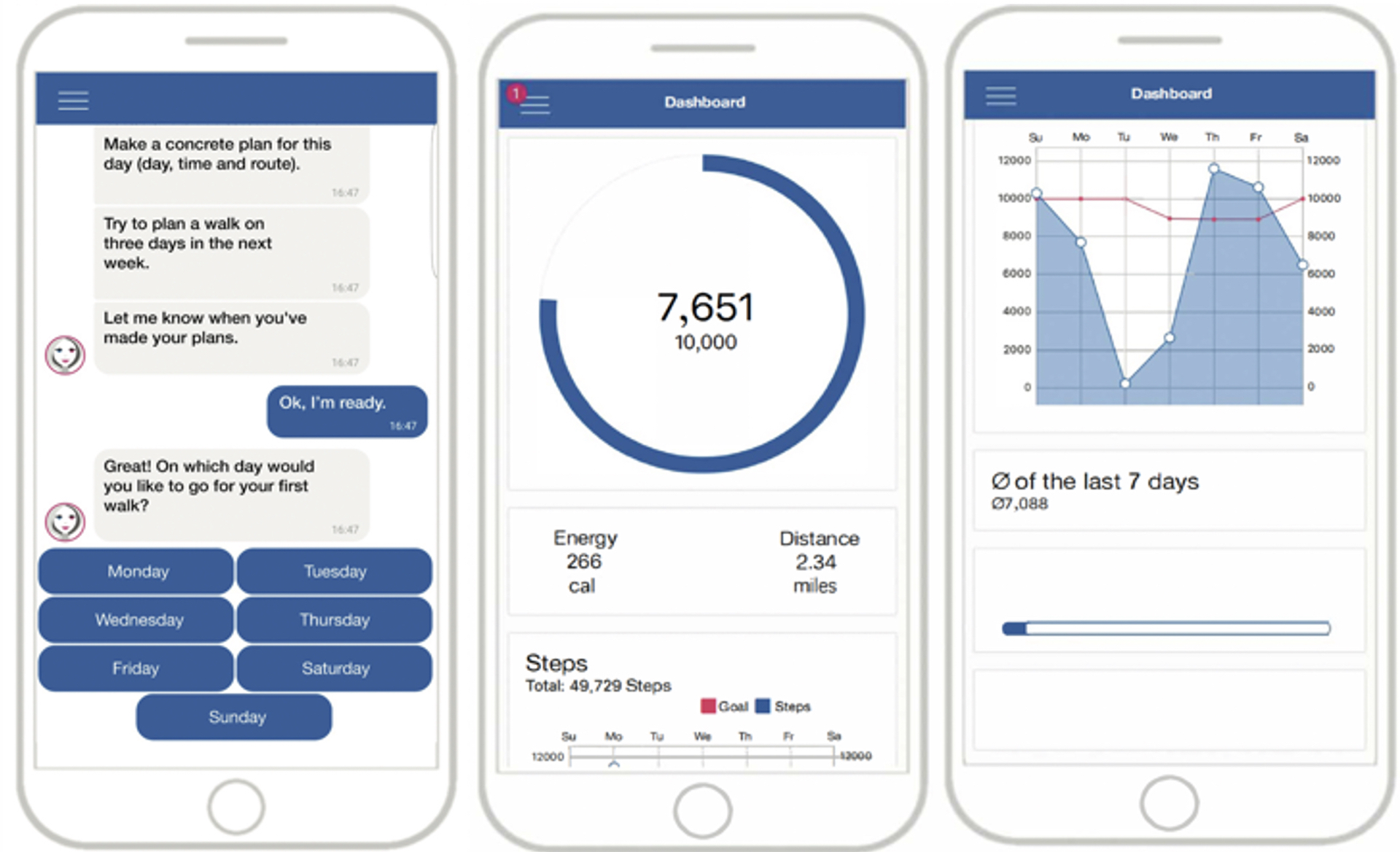

States-of-receptivity for digital health interventions

We have multiple projects currently underway to better evaluate the contexts where people are willing and able to engage with and use digital health interventions. These range from behavior-change interventions in free-living situations to interventions during specific scenarios, e.g., driving.

News

(October 2025) Varun gave three invited talks at IEEE BHI.

Varun was invited to give three talks at various special sessions and workshops at the IEEE International Conference on Biomedical and Health Informatics (BHI) in Atlanta.

(October 2025) UbiWell Lab at UbiComp 2025

Several lab members attended UbiComp 2025 in Finland!

(October 2025) Two papers accepted at IMWUT 2025

We have two new papers accepted at IMWUT, to be presented at UbiComp 2026 in Shanghai!

(July 2025) Two papers accepted at IMWUT 2025

We have two new papers accepted at IMWUT, to be presented at UbiComp 2025 in Finland!

(July 2025) Varun participated in a panel discussion at IEEE ICDH

Varun was invited to participate in a panel discussion on “Harnessing the Power of AI to Enable Highly Personalized Anytime/Anywhere Mental Health and Substance Use Care” at the International Conference on Digital Health in Helsinki.

(June 2025) NSF CAREER Award for Just-in-Time Adaptive Interventions

We are thrilled to share that our lab has received the National Science Foundation (NSF) CAREER award to support our research on designing intelligent, context-aware systems for digital health interventions.

Recent Publications

Natasha Yamane, Varun Mishra, Matthew S. Goodwin

arXiv 2025

Siyi Wu, Weidan Cao, Shihan Fu, Bingsheng Yao, Ziqi Yang, Changchang Yin, Varun Mishra, Daniel Addison, Ping Zhang, Dakuo Wang

Proceedings of the Conference on Human Factors in Computing Systems (CHI) 2025

Yuna Watanabe, Natasha Yamane, Aarti Sathyanarayana, Varun Mishra, Matthew S. Goodwin

UbiComp 2025 (MHSI Workshop)

Veronika Potter, Ha Le, Uzma Haque Syeda, Stephen Intille, Michelle Borkin

IEEE Visualization and Visual Analytics (VIS) 2025

Ha Le, Akshat Choube, Vedant Das Swain, Varun Mishra, Stephen Intille

UbiComp 2025 (GenAI4HS Workshop)